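I was debugging the sample program by following the help documentation for the SuperMap iObjects for Spark extension module (version 9.0.1), and the program fails at runtime with the following error: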

14:13:21 INFO StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

java.lang.UnsatisfiedLinkError: no WrapjGeo in java.library.path

at java.lang.ClassLoader.loadLibrary(ClassLoader.java:1867)

at java.lang.Runtime.loadLibrary0(Runtime.java:870)

at java.lang.System.loadLibrary(System.java:1122)

at com.supermap.data.Environment.LoadWrapJ(Unknown Source)

at com.supermap.data.Environment.<clinit>(Unknown Source)

at com.supermap.data.LicenseWrapInstance.findAndReadLicFile(Unknown Source)

at com.supermap.data.License.<init>(Unknown Source)

at com.supermap.bdt.base.Util$.<init>(Util.scala:56)

at com.supermap.bdt.base.Util$.<clinit>(Util.scala)

at com.supermap.bdt.io.simplejson.SimpleJsonReader$.read(SimpleJsonReader.scala:36)

at com.bzhcloud.spatial.process.TestData$.main(TestData.scala:14)

at com.bzhcloud.spatial.process.TestData.main(TestData.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:736)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:185)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:210)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Exception in thread "main" java.lang.UnsatisfiedLinkError: com.supermap.data.EnvironmentNative.jni_GetCurrentCulture()Ljava/lang/String;

at com.supermap.data.EnvironmentNative.jni_GetCurrentCulture(Native Method)

at com.supermap.data.Environment.getCurrentCulture(Unknown Source)

at com.supermap.data.Environment.<clinit>(Unknown Source)

at com.supermap.data.LicenseWrapInstance.findAndReadLicFile(Unknown Source)

at com.supermap.data.License.<init>(Unknown Source)

at com.supermap.bdt.base.Util$.<init>(Util.scala:56)

at com.supermap.bdt.base.Util$.<clinit>(Util.scala)

at com.supermap.bdt.io.simplejson.SimpleJsonReader$.read(SimpleJsonReader.scala:36)

at com.bzhcloud.spatial.process.TestData$.main(TestData.scala:14)

at com.bzhcloud.spatial.process.TestData.main(TestData.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:736)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:185)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:210)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

14:13:23 ERROR Inbox: Ignoring error

org.apache.spark.SparkException: Unsupported message RpcMessage(127.0.0.1:62152,RetrieveSparkAppConfig,org.apache.spark.rpc.netty.RemoteNettyRpcCallContext@5516934e) from 127.0.0.1:62152

at org.apache.spark.rpc.netty.Inbox$$anonfun$process$1$$anonfun$apply$mcV$sp$1.apply(Inbox.scala:106)

at org.apache.spark.rpc.netty.Inbox$$anonfun$process$1$$anonfun$apply$mcV$sp$1.apply(Inbox.scala:105)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend$DriverEndpoint$$anonfun$receiveAndReply$1.applyOrElse(CoarseGrainedSchedulerBackend.scala:150)

at org.apache.spark.rpc.netty.Inbox$$anonfun$process$1.apply$mcV$sp(Inbox.scala:105)

at org.apache.spark.rpc.netty.Inbox.safelyCall(Inbox.scala:205)

at org.apache.spark.rpc.netty.Inbox.process(Inbox.scala:101)

at org.apache.spark.rpc.netty.Dispatcher$MessageLoop.run(Dispatcher.scala:213)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

The key information is this:

java.lang.UnsatisfiedLinkError: no WrapjGeo in java.library.path
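To narrow this down, it may help to check whether the JVM can actually see the native library. The following is only a diagnostic sketch, not anything from the SuperMap documentation: the class name `NativeLibCheck` and the helper `findLibrary` are hypothetical, and the library name `WrapjGeo` is taken from the error message. It lists which directories on `java.library.path` contain the platform-specific library file.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class, for diagnosis only.
public class NativeLibCheck {

    // Returns the directories in libraryPath that contain the
    // platform-specific file for libName (for example libWrapjGeo.so
    // on Linux or WrapjGeo.dll on Windows).
    static List<String> findLibrary(String libraryPath, String libName) {
        List<String> hits = new ArrayList<>();
        if (libraryPath == null || libraryPath.isEmpty()) {
            return hits;
        }
        String fileName = System.mapLibraryName(libName);
        for (String dir : libraryPath.split(File.pathSeparator)) {
            if (!dir.isEmpty() && new File(dir, fileName).isFile()) {
                hits.add(dir);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        String path = System.getProperty("java.library.path");
        System.out.println("java.library.path = " + path);
        // "WrapjGeo" is the name reported in the UnsatisfiedLinkError.
        List<String> hits = findLibrary(path, "WrapjGeo");
        if (hits.isEmpty()) {
            System.out.println(System.mapLibraryName("WrapjGeo")
                    + " was not found in any listed directory");
        } else {
            System.out.println("Found in: " + hits);
        }
    }
}
```

If the check reports that nothing was found, the directory holding the native libraries is probably not on the path the driver JVM uses. On Linux that path is typically seeded from `LD_LIBRARY_PATH` and on Windows from `PATH`; it can also be set explicitly with `-Djava.library.path=...`. Whether that alone resolves the error here is an assumption, not something confirmed by the help documentation.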

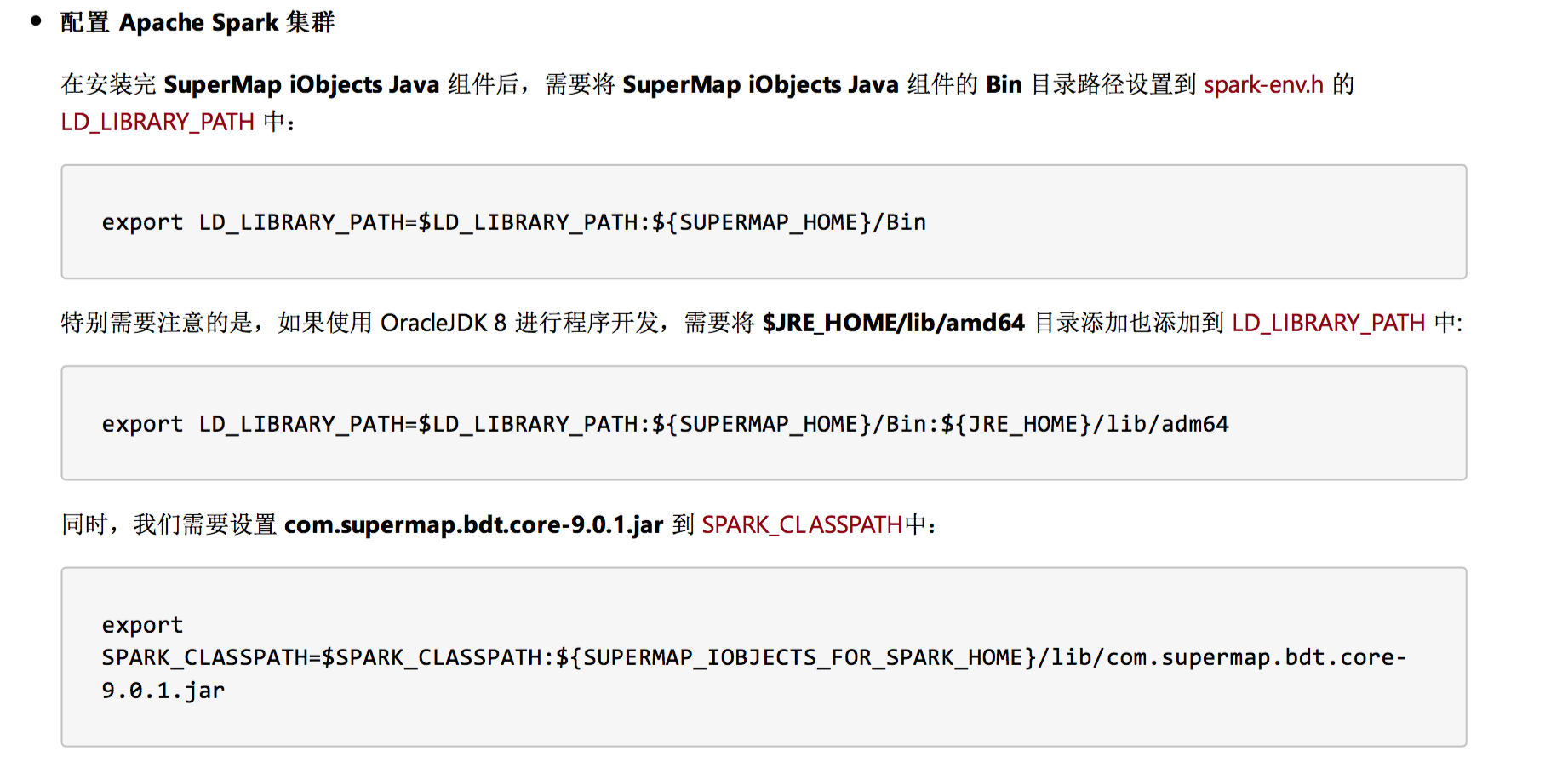

Apart from the items the help documentation says to install, is there anything else that needs to be configured?

Looking forward to your reply, thanks!
